Last-Mile Targeting in Ukraine’s Drone War: AI, Edge Computing, and the Limits of Autonomy

by Benjamin Cook

As Ukraine pioneers modern drone warfare under conditions of intense electronic warfare (EW), the last mile of targeting—the final distance before a drone engages its target—has emerged as a decisive technical and operational bottleneck. This paper examines how Ukraine uses artificial intelligence (AI), edge computing, and human-in-the-loop (HITL) systems to manage last-mile targeting in a contested battlespace. It explores the role of edge-based inference, the operational viability of fiber-optic drones, and the cost-driven limitations of full automatic target recognition (ATR). The argument is straightforward: while AI assists in tracking and stabilization, the economic and technical burdens of full autonomy make HITL the only scalable option on the battlefield today.

I. Introduction

Drones have become central to Ukraine’s defense strategy, enabling reconnaissance, adjusting artillery fire, and carrying out precision strikes. Among the most critical and technically challenging aspects of drone warfare is last-mile targeting—the final seconds before a munition is delivered to its target. In this phase, environmental complexity, electronic warfare, and system limitations converge.

The core challenge is simple: autonomous target acquisition at scale is not yet a battlefield reality. While artificial intelligence can assist with visual recognition and flight path correction, current systems deployed in Ukraine cannot consistently maintain or reacquire targets under real-world conditions that include spoofing, occlusion, rapid movement, and visual ambiguity.

In Ukraine's FPV drone ecosystem, the problem is compounded by GPS jamming, RF interference, and other degraded signal environments, particularly near the front lines. These conditions disrupt remote control links and limit situational awareness. As a result, many Ukrainian drones are designed with a human-in-the-loop (HITL) model—not because AI systems stop functioning, but because fully autonomous targeting is not economically or logistically feasible at scale. HITL offers a scalable compromise: AI may assist with navigation or visual tracking, but human operators remain responsible for final targeting decisions.

This paper examines how Ukraine has adapted to these operational constraints. It begins with a technical analysis of AI use in target identification and flight stability, explores the deployment of edge computing to process data locally in jammed environments, and contrasts this architecture with the use of fiber-optic drones that bypass RF links altogether. It also evaluates the promise and limitations of automatic target recognition (ATR) systems, which remain constrained by compute resources, training cycles, and the adversarial nature of the battlefield.

Hate Subscriptions? Me too! You can support me with a one time contribution to my research at Buy Me a Coffee. https://buymeacoffee.com/researchukraine

II. AI in Target Identification and Persistence

Artificial intelligence plays a growing role in Ukraine’s drone operations, particularly in target acquisition, object classification, and terminal tracking. Contrary to popular assumptions, AI is not delivering autonomous engagement. It is being used tactically to augment human control during the most demanding phase of flight: the final approach.

1. Narrow AI for Narrow Tasks

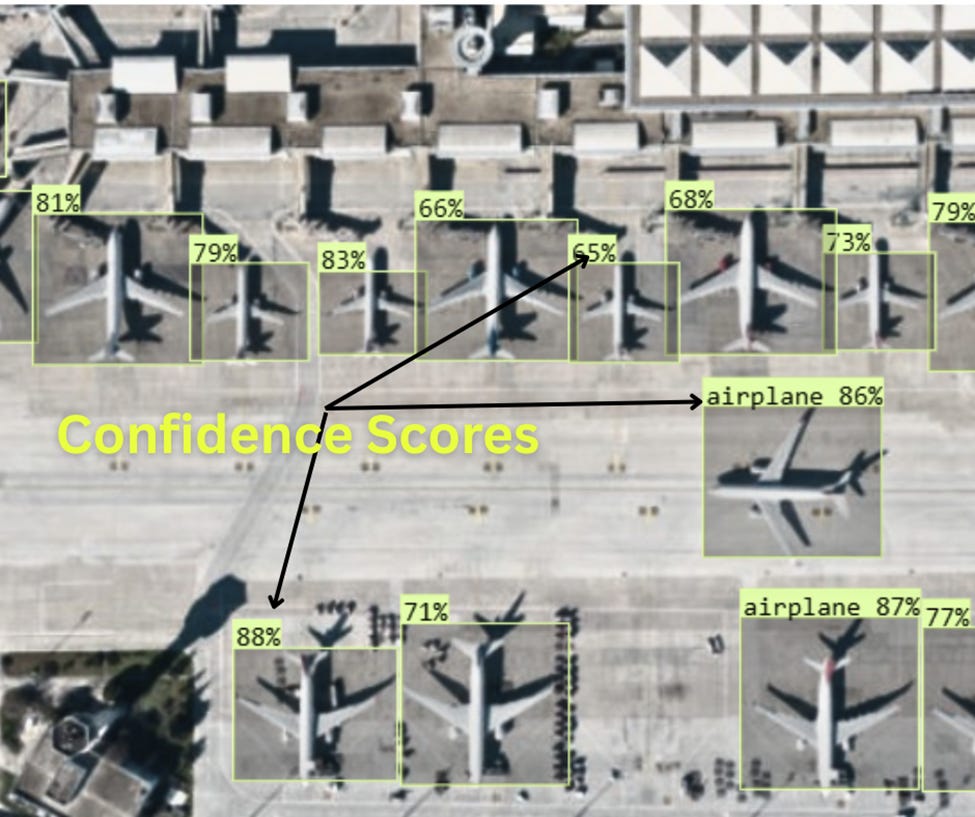

Ukrainian drone developers use lightweight, task-specific models designed to assist—but not replace—the operator. Most implementations rely on adapted versions of models like YOLO (You Only Look Once), tuned to battlefield datasets to recognize personnel, vehicles, and artillery from FPV or gimbaled cameras. Segmentation and bounding-box classification tools allow for rapid visual tagging of potential targets under diverse conditions, including urban clutter, snow, and foliage.

These models do not run in the cloud. They operate on constrained edge devices like Raspberry Pi, Jetson Nano, or custom-built microcomputers, optimized for inference speed, thermal efficiency, and power consumption. The focus is on real-time classification and visual tracking, not autonomy.

Training these models from scratch is generally infeasible. Ukrainian units instead fine-tune open-source architectures using battlefield footage and limited annotated datasets. This allows for rapid deployment, but also imposes constraints on model complexity and generalizability.

“Ukrainians have created an AI for drones that automatically identifies and strikes targets,” The Odessa Journal, 2024. https://odessa-journal.com/ukrainians-have-created-an-ai-for-drones-that-automatically-identifies-and-strikes-targets.

“Trained on classified battlefield data, AI multiplies effectiveness of Ukraine’s drones,” Breaking Defense, March 2025. https://breakingdefense.com/2025/03/trained-on-classified-battlefield-data-ai-multiplies-effectiveness-of-ukraines-drones-report.

2. Target Persistence in Terminal Phase

One of the most critical uses of AI occurs during last-mile targeting, when an operator identifies a target, often by placing a bounding box or similar marker. At that point, the onboard AI takes over—using optical flow algorithms and frame-to-frame correlation to maintain lock during high-speed approach or brief occlusions. This handoff reduces pilot workload and allows for more accurate final corrections, especially when RF signal quality is degraded or when terrain and movement introduce visual noise.
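Frame-to-frame correlation, in its simplest textbook form, can be illustrated with a toy example. The sketch below is not drawn from any fielded system. It assumes tiny synthetic grayscale frames stored as nested lists, and the names `track`, `ssd`, `crop`, and `make_frame` are hypothetical helpers introduced only for illustration. It searches a small window around the previous position for the patch that best matches a stored template, using the sum of squared differences as the similarity score.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image as rows of pixel intensities


def crop(frame: Frame, top: int, left: int, h: int, w: int) -> Frame:
    """Return the h x w window whose top-left corner is (top, left)."""
    return [row[left:left + w] for row in frame[top:top + h]]


def ssd(a: Frame, b: Frame) -> int:
    """Sum of squared differences between two equally sized patches."""
    return sum((pa - pb) ** 2
               for ra, rb in zip(a, b)
               for pa, pb in zip(ra, rb))


def track(frame: Frame, template: Frame,
          prev: Tuple[int, int], radius: int) -> Tuple[int, int]:
    """Find the best template match within `radius` pixels of `prev`."""
    h, w = len(template), len(template[0])
    rows, cols = len(frame), len(frame[0])
    best_pos, best_cost = prev, None  # type: Tuple[int, int], Optional[int]
    for top in range(max(0, prev[0] - radius),
                     min(rows - h, prev[0] + radius) + 1):
        for left in range(max(0, prev[1] - radius),
                          min(cols - w, prev[1] + radius) + 1):
            cost = ssd(crop(frame, top, left, h, w), template)
            if best_cost is None or cost < best_cost:
                best_pos, best_cost = (top, left), cost
    return best_pos


def make_frame(rows: int, cols: int, top: int, left: int,
               patch: Frame) -> Frame:
    """Build a black synthetic frame with `patch` pasted at (top, left)."""
    frame = [[0] * cols for _ in range(rows)]
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            frame[top + r][left + c] = value
    return frame


# Synthetic data: a 2x2 patch moves from (2, 2) to (3, 4) between frames.
PATCH = [[9, 7], [5, 8]]
frame0 = make_frame(8, 8, 2, 2, PATCH)
frame1 = make_frame(8, 8, 3, 4, PATCH)

template = crop(frame0, 2, 2, 2, 2)   # patch marked in the first frame
new_pos = track(frame1, template, prev=(2, 2), radius=2)
print(new_pos)
```

Restricting the search to a small window around the last known position is what keeps this kind of method cheap enough for constrained hardware. It is also why a large jump between frames or a long occlusion can break the match.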

III. Edge Computing Under Battlefield Constraints

The use of AI in last-mile targeting depends entirely on one prerequisite: the ability to process data locally. In the Ukrainian theater, drones cannot rely on continuous cloud access, remote servers, or offboard computation. RF links are unreliable, and jammed environments are the norm. This has pushed Ukrainian drone developers toward a distributed architecture where inference must occur on the edge—onboard the drone itself.

“Drones with edge AI: The future of warfare,” EE Times Europe, 2023. https://www.eetimes.eu/drones-with-edge-ai-the-future-of-warfare.

1. Why Edge Computing Is Required

Ukrainian drone operations face consistent GPS denial, radio frequency jamming, and occasional loss of visual data transmission. Even where RF links are maintained, bandwidth is limited and latency is unpredictable. These conditions rule out remote processing or any dependency on external AI feedback loops.

Instead, all computationally critical functions (object detection, target tracking, navigation corrections) must be executed onboard. This keeps the drone functional when the signal drops or when the pilot is flying through video lag or partial blackouts.

2. Hardware and Software Tradeoffs

Edge-capable drones are constrained by three interrelated factors: power availability, thermal limitations, and compute resources. Drones equipped with onboard AI typically use microcomputers or compact modules that support inference frameworks like TensorFlow Lite or ONNX Runtime, stripped down for embedded deployment.

TensorFlow Lite documentation. https://www.tensorflow.org/lite.

This creates a necessary tradeoff: models must be small enough to run efficiently without overheating the board, draining the battery, or delaying response times. High-confidence object detection might require frame skipping or reduced resolution to maintain real-time responsiveness in a maneuvering drone.
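The frame-skipping and resolution tradeoff can be made concrete with some back-of-the-envelope arithmetic. The sketch below is illustrative only: the function names are hypothetical, the example numbers (a 30 fps camera, 120 ms per inference) are placeholders rather than measurements, and the assumption that inference time scales with pixel count is a rough simplification that real models will not follow exactly.

```python
import math


def frame_stride(camera_fps: float, inference_ms: float) -> int:
    """How many camera frames elapse per inference (1 = every frame)."""
    frame_interval_ms = 1000.0 / camera_fps
    return max(1, math.ceil(inference_ms / frame_interval_ms))


def effective_rate_hz(camera_fps: float, inference_ms: float) -> float:
    """Detection updates per second once frames are skipped."""
    return camera_fps / frame_stride(camera_fps, inference_ms)


def scaled_latency_ms(inference_ms: float, scale: float) -> float:
    """Estimated latency after resizing each image side by `scale`.

    ASSUMPTION: cost is proportional to pixel count, so it scales
    with scale**2. Real models only approximate this.
    """
    return inference_ms * scale ** 2


# Placeholder numbers, not measurements from any real board.
stride_full = frame_stride(30, 120)            # process every 4th frame
rate_full = effective_rate_hz(30, 120)         # 7.5 updates per second
half_res_ms = scaled_latency_ms(120, 0.5)      # 30.0 ms at half resolution
stride_half = frame_stride(30, half_res_ms)    # every frame fits again
print(stride_full, rate_full, half_res_ms, stride_half)
```

Under these placeholder numbers, halving the resolution recovers full frame rate at the cost of detail, while keeping full resolution means the detector sees only every fourth frame.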

IV. Fiber-Optic-Controlled Drones: Benefits and Limitations

While most Ukrainian FPV drones rely on radio frequency (RF) links for control, there is a growing class of systems that operate using fiber-optic cables to provide a direct, unjammable physical connection between operator and drone. These systems are a direct response to the increasingly dense and sophisticated electronic warfare (EW) environment, particularly in frontline zones where RF jamming is persistent and aggressive.

“They cannot be jammed: Fibre-optic drones pose new threat in Ukraine,” The Guardian, April 2025. https://www.theguardian.com/world/2025/apr/23/they-cannot-be-jammed-fibre-optic-drones-pose-new-threat-in-ukraine.

1. Why Fiber Is Used

Fiber-optic drones bypass the vulnerabilities of wireless communication. Because they transmit control signals through a physical medium, they are effectively immune to RF jamming and spoofing, making them highly desirable for high-assurance strikes in areas where even low-bandwidth video or command signals are disrupted. Fiber-optic drones can also operate where AI-assisted last-mile targeting cannot. They can sit idle waiting for a target, or creep slowly through trenches, homes, and treelines. AI-assisted last-mile targeting would not be preferred in any of these scenarios.

2. Operational Constraints of Fiber-Optic Drones

Despite their resilience to jamming, fiber-optic drones come with significant tactical limitations:

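● Cable Management and Drag: Most fiber-optic drones carry a spool onboard and unwind cable during flight. The longer the planned range, the longer and heavier the spool the drone must lift, which cuts into payload and flight time, and the growing length of trailing cable contributes to drag.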

● Agility Limits: The physical tether imposes constraints on turn radius, pitch control, and speed stability, particularly in urban environments or cluttered terrain.

● Terrain Hazards: In areas with trees, buildings, or debris, the fiber line itself is a point of failure. Snags or friction from structures can shear the cable, terminating control mid-mission.

Nonetheless, these drones have demonstrated effective ranges of 20 kilometers or more, depending on the design and mission profile.
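The link between range and cable weight noted in the list above can be put in rough numbers. The sketch below is simple arithmetic under stated assumptions: the per-kilometer cable mass, the empty spool mass, and the lift budget are hypothetical placeholders chosen for illustration, not specifications of any real drone or fiber product, and the function names are invented for this example.

```python
def spool_mass_g(cable_km: float, grams_per_km: float,
                 empty_spool_g: float = 0.0) -> float:
    """Mass of a loaded spool: cable mass plus the empty spool housing."""
    return cable_km * grams_per_km + empty_spool_g


def payload_left_g(lift_budget_g: float, cable_km: float,
                   grams_per_km: float, empty_spool_g: float = 0.0) -> float:
    """Lift capacity remaining for everything else after the spool."""
    return lift_budget_g - spool_mass_g(cable_km, grams_per_km, empty_spool_g)


# HYPOTHETICAL placeholder values, for illustration only.
GRAMS_PER_KM = 50.0      # assumed cable mass per kilometer
EMPTY_SPOOL_G = 100.0    # assumed mass of the spool housing
LIFT_BUDGET_G = 3000.0   # assumed total mass the airframe can carry

for planned_km in (5, 10, 20):
    spool = spool_mass_g(planned_km, GRAMS_PER_KM, EMPTY_SPOOL_G)
    left = payload_left_g(LIFT_BUDGET_G, planned_km,
                          GRAMS_PER_KM, EMPTY_SPOOL_G)
    print(f"{planned_km} km: spool {spool:.0f} g, remaining lift {left:.0f} g")
```

Whatever the real figures are, the relationship is linear: every extra kilometer of planned range is paid for in lift capacity before the drone leaves the ground.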

V. Automatic Target Recognition (ATR): Capabilities, Costs, and Constraints

Automatic Target Recognition (ATR) refers to a system’s ability to autonomously identify, classify, and prioritize targets without human input during engagement. While ATR is used in some high-end Western systems, its use is typically narrow and tightly scoped. One clear example is the home-on-jam missile, which detects and homes in on hostile electronic emissions using onboard sensors. This is a form of ATR, but it relies on defined signal parameters and binary engagement logic.

RAND Corporation, “Autonomy in Weapon Systems: Applications and Challenges,” 2022. https://www.rand.org/pubs/research_reports/RRA737-1.html.

1. What ATR Requires

Effective visual ATR demands:

● Large, labeled training datasets representative of the operational environment.

● Significant compute resources for model training and real-time inference.

● Robust testing and validation, often in dynamic and deceptive environments.

● Hardware integration on drones capable of sustaining inference without overheating or draining batteries.

These requirements are expensive, time-consuming, and vulnerable to disruption. They do not scale well to the thousands of disposable drones Ukraine builds and deploys each month.

2. Cost and Flexibility

Training ATR systems requires human-intensive annotation pipelines, secure and redundant compute, and sustained iteration. Even when feasible, these systems are less adaptable than a human pilot. ATR is inherently brittle—easily confused by camouflage, partial occlusion, or changes in background geometry.

“Automatic Target Recognition for Military Use: What’s the Potential?” FlySight.it, 2024. https://www.flysight.it/automatic-target-recognition-for-military-use-whats-the-potential.

For a military that prioritizes adaptation, speed, and cost-efficiency, ATR remains a poor trade-off for most tactical scenarios.

The Only Real Option

Fully autonomous targeting remains out of reach for most battlefield drones—not because the underlying technology is impossible, but because it is not yet economically or logistically viable at scale. Ukraine has adopted a more pragmatic model: human operators perform the most sensitive and error-prone task—target recognition—while drones execute terminal tracking and guidance. These onboard functions rely on algorithms and methods that have existed for decades, including optical flow, frame differencing, and bounding-box persistence. They require far less computational power and can run reliably on constrained edge hardware. This architecture reflects a deliberate division of labor: the human handles what still cannot be safely or affordably automated, and the machine handles what can.

Benjamin Cook continues to travel to, often lives in, and works in Ukraine, a connection spanning more than 14 years. He holds an MA in International Security and Conflict Studies from Dublin City University and has consulted with journalists on AI in drones, U.S. military technology, and related topics. He is co-founder of the nonprofit UAO, working in southern Ukraine. You can find Mr. Cook between Odesa, Ukraine; Charleston, South Carolina; and Tucson, Arizona.

